Global Custody Pro

The Complete Guide to AI in Digital Asset Custody

Where AI belongs in the custody of assets designed to eliminate intermediaries

This is the second instalment in our new series for Q1 2026. The first article, “The Complete 2026 Guide to AI in Global Custody”, achieved some of our highest email engagement ever. If implementing AI is part of your KPIs this year, share this email with a colleague now so they’re on the same page. We only grow through word of mouth and referrals.

Table of Contents

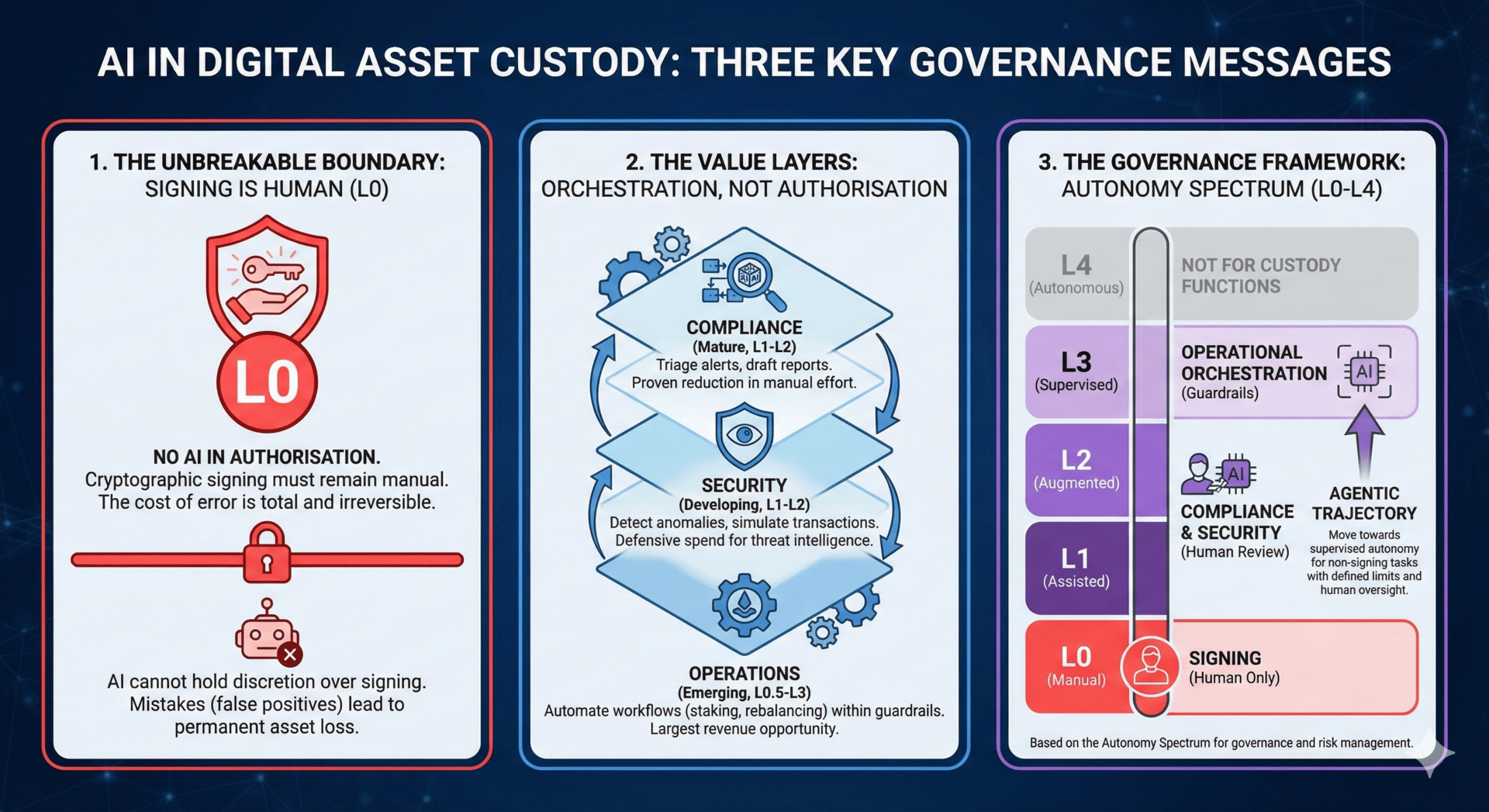

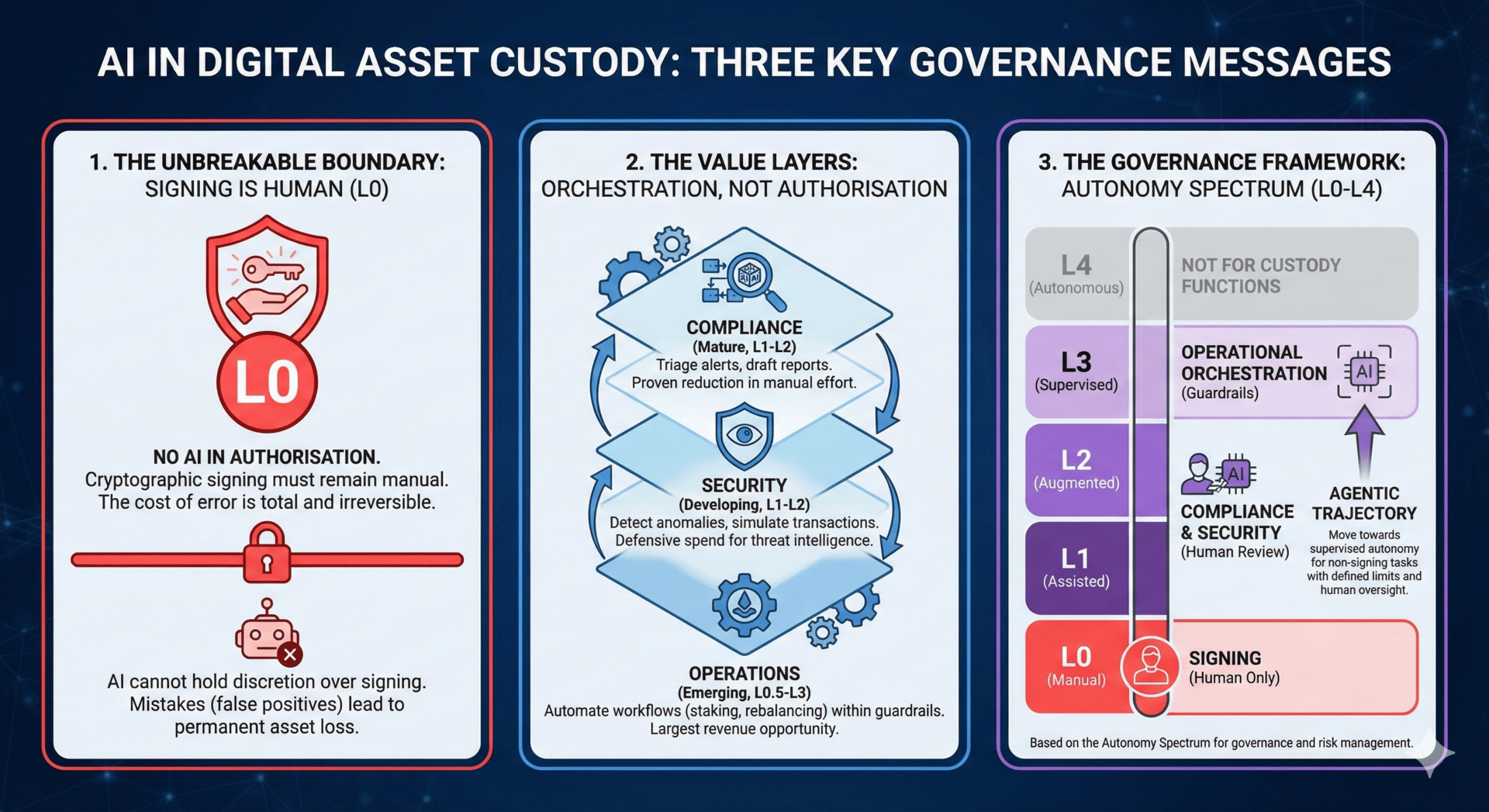

AI in digital asset custody belongs in the layers surrounding cryptographic signing, not in the signing itself. Compliance investigation, security monitoring, and operational automation can all benefit from machine learning. Transaction authorisation cannot. The irreversibility of blockchain transactions makes this boundary non-negotiable: a compliance false positive wastes analyst hours, but a signing error loses client assets permanently.

This distinction matters because the major security incidents in digital asset custody share a pattern. In February 2025, approximately $1.5 billion was lost from Bybit when attackers compromised a developer environment at its wallet software provider and manipulated the transaction that Bybit's signers approved. In March 2022, approximately $625 million was lost from the Ronin Bridge when attackers used social engineering to compromise validator private keys. In both cases, the cryptographic primitives were not broken. The failures occurred in key management and the human processes surrounding transaction authorisation.

Institutional adoption is accelerating despite these risks. Coinbase holds approximately $300 billion in digital assets as of Q3 2025, including custody for eight of eleven spot Bitcoin ETFs. BitGo secures $90.3 billion. Fireblocks has processed over $10 trillion in cumulative volume across 2,300 institutional clients. The institutional clients behind these figures are not holding keys themselves. They are hiring custodians, accepting an intermediary in exchange for segregated accounts, audit trails, and regulatory compliance.

If your compliance team is still manually triaging the majority of transaction alerts, your cost base and response times are now structurally uncompetitive. Blockchain analytics providers have offered AI-enhanced monitoring for several years and the capability has matured through multiple product cycles. Your crypto-native competitors are using it.

The Operating Environment

Digital asset custody means controlling the cryptographic private keys that authorise blockchain transactions. Lose the key, lose the asset. Compromise the key, lose the asset. There is no custodian of last resort and no settlement system to reverse errors. This constraint shapes every decision about where AI can and cannot operate.

The core infrastructure reflects this reality. Multi-party computation distributes key shares so no single entity possesses the complete private key. Hardware security modules store keys in tamper-resistant hardware. Policy engines govern what can be signed and under what conditions: transaction limits, whitelist enforcement, multi-signature requirements, time-based controls. These systems are deterministic and rule-based. They should stay that way.
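To make "deterministic and rule-based" concrete, here is a minimal Python sketch of a policy check covering the four controls named above: a per-transaction limit, a whitelist, a multi-signature quorum, and a time window. The names (`Policy`, `TransferRequest`, `evaluate`) and all thresholds are hypothetical and do not reflect any vendor's API. The property that matters is that identical inputs always produce identical decisions, with no learned model involved.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Policy:
    # Hypothetical policy parameters; production engines expose many more controls.
    max_amount: float            # per-transaction limit, in asset units
    whitelist: frozenset[str]    # destinations permitted to receive funds
    required_approvals: int      # multi-signature quorum
    window_start: time           # transfers permitted only inside this window
    window_end: time


@dataclass(frozen=True)
class TransferRequest:
    destination: str
    amount: float
    approvers: frozenset[str]    # distinct people who have approved
    submitted_at: time


def evaluate(policy: Policy, req: TransferRequest) -> tuple[bool, list[str]]:
    """Return (allowed, reasons). A pure function: no randomness, no learned weights."""
    reasons = []
    if req.amount > policy.max_amount:
        reasons.append("amount exceeds per-transaction limit")
    if req.destination not in policy.whitelist:
        reasons.append("destination not whitelisted")
    if len(req.approvers) < policy.required_approvals:
        reasons.append("approval quorum not met")
    if not (policy.window_start <= req.submitted_at <= policy.window_end):
        reasons.append("outside permitted time window")
    return (not reasons, reasons)


policy = Policy(
    max_amount=250.0,
    whitelist=frozenset({"addr-treasury-cold", "addr-exchange-settle"}),
    required_approvals=2,
    window_start=time(8, 0),
    window_end=time(18, 0),
)

ok, why = evaluate(
    policy,
    TransferRequest("addr-treasury-cold", 100.0, frozenset({"alice", "bob"}), time(9, 30)),
)
print(ok, why)  # True []
```

Returning every failed rule, rather than stopping at the first, gives auditors a complete record of why a request was refused.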

The market has split between crypto-native custodians and traditional players entering the space. Coinbase, BitGo, Fireblocks, and Anchorage built technology stacks specifically for digital assets. BNY launched digital asset custody in October 2022 and now administers BlackRock's BUIDL tokenised fund. State Street partnered with Taurus. Northern Trust co-founded Zodia Custody with Standard Chartered. Citi targets 2026 for its platform launch.

Both groups face the same operational challenges. Readers from securities services will recognise these workflows; the difference is irreversibility.

The Same Problems, Different Assets

The workflows in digital asset custody will look familiar to anyone who has run traditional securities operations.

Consider corporate actions. In traditional custody, an announcement arrives from a German issuer overnight. It is written in German, references local securities law, and requires translation, validation against multiple data sources, client notification, and election processing within 24 hours. The operations team races against a deadline to interpret ambiguous information and execute correctly.

In digital custody, a fork announcement arrives via X (Twitter), GitHub, and Medium. The operations team must evaluate technical stability, assess market capitalisation and liquidity, determine whether to support the new chain, communicate the decision to clients, and execute. The timeline is often shorter. The information sources are less structured. But the workflow is the same: interpret ambiguous external information, make a decision, execute accurately, document everything.

Major custodians publish formal fork and airdrop policies with evaluation criteria typically covering technical stability, market capitalisation thresholds, liquidity, and operational cost. The SEC's December 2025 broker-dealer custody guidance addresses policies for forks and airdrops, formalising what was already an operational necessity.
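A minimal sketch of how those published criteria could be encoded as a checklist that produces a recommendation for a human committee. The thresholds, the field names, and the use of replay protection as a proxy for technical stability are all hypothetical; each custodian's policy defines its own. The `decision` field is deliberately left empty for the human approver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ForkAssessment:
    chain_name: str
    replay_protection: bool          # hypothetical proxy for technical stability
    market_cap_usd: float
    avg_daily_volume_usd: float      # proxy for liquidity
    est_integration_cost_usd: float  # operational cost of supporting the new chain


# Hypothetical thresholds, not taken from any published policy.
MIN_MARKET_CAP_USD = 100_000_000
MIN_DAILY_VOLUME_USD = 5_000_000
MAX_INTEGRATION_COST_USD = 250_000


def recommend_support(a: ForkAssessment) -> dict:
    """Check a fork against policy criteria and return a recommendation, not a decision."""
    checks = {
        "technical_stability": a.replay_protection,
        "market_cap": a.market_cap_usd >= MIN_MARKET_CAP_USD,
        "liquidity": a.avg_daily_volume_usd >= MIN_DAILY_VOLUME_USD,
        "operational_cost": a.est_integration_cost_usd <= MAX_INTEGRATION_COST_USD,
    }
    return {
        "chain": a.chain_name,
        "checks": checks,
        "recommendation": "support" if all(checks.values()) else "do not support",
        "decision": None,  # recorded by the human approver for the audit trail
    }


result = recommend_support(
    ForkAssessment("ExampleChain Classic", True, 40_000_000, 2_000_000, 80_000)
)
print(result["recommendation"])  # do not support
```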

Staking income requires calculation and allocation across client accounts with different fee structures and tax treatments. This parallels income collection and allocation in securities custody. Compliance investigation means triaging transaction alerts and filing suspicious activity reports, the same AML and sanctions screening that traditional custody teams know well.
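The allocation step can be sketched as a pro-rata split of a pooled reward followed by a per-client fee deduction. This is a simplified illustration under stated assumptions: the client names, fee rates, and 8-decimal asset precision are hypothetical, and tax treatment is out of scope. `Decimal` avoids binary floating-point drift in money arithmetic.

```python
from decimal import Decimal, ROUND_DOWN


def allocate_rewards(
    total_reward: Decimal,
    stakes: dict[str, Decimal],
    fee_rates: dict[str, Decimal],
) -> dict[str, dict[str, Decimal]]:
    """Split a pooled staking reward pro rata by stake, then deduct each client's fee."""
    total_stake = sum(stakes.values())
    quantum = Decimal("0.00000001")  # assumed asset precision: 8 decimal places
    out = {}
    for client, stake in stakes.items():
        # Rounding down leaves any residual dust unallocated for separate reconciliation.
        gross = (total_reward * stake / total_stake).quantize(quantum, rounding=ROUND_DOWN)
        fee = (gross * fee_rates[client]).quantize(quantum, rounding=ROUND_DOWN)
        out[client] = {"gross": gross, "fee": fee, "net": gross - fee}
    return out


stakes = {"client-a": Decimal("600"), "client-b": Decimal("400")}
fees = {"client-a": Decimal("0.10"), "client-b": Decimal("0.15")}
alloc = allocate_rewards(Decimal("10"), stakes, fees)
print(alloc["client-a"]["net"], alloc["client-b"]["net"])  # 5.40000000 3.40000000
```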

The tools developed for exception handling in traditional custody offer useful analogues. State Street's Alpha AI Data Quality reduced exception counts from 31,000 to 4,000 over six months in securities operations while catching 100% of genuine issues. SmartStream's Smart Agents use domain-trained AI to triage breaks and learn from human analysts. The problem structure is similar across asset classes: high volume, mostly noise, occasional genuine issues requiring human judgment. These are not proof that identical tools will work for digital assets, but they demonstrate that the approach transfers when the underlying workflow matches.

The Autonomy Spectrum

AI deployments in custody operations exist along a spectrum of autonomy. Understanding where each use case sits on this spectrum, and where it should sit, is essential for governance and risk management.

L0 (Manual): Humans perform all tasks. AI plays no role. This is where transaction signing must remain. The cost of error is total and irreversible.

L1 (Assisted): AI provides information and suggestions. Humans make all decisions and execute all actions. Example: AI flags a transaction as potentially suspicious; analyst investigates and decides whether to file a report.

L2 (Augmented): AI drafts outputs and proposes actions. Humans review, approve, and authorise execution. Example: AI pre-populates a suspicious activity report with transaction details and risk rationale; analyst reviews, edits if necessary, and submits.

L3 (Supervised): AI decides and executes within defined guardrails. Humans monitor outcomes and handle exceptions. Example: AI automatically rebalances hot wallet funding when balances fall below threshold; operations team reviews daily summaries and investigates anomalies.

L4 (Autonomous): AI operates independently. Humans intervene only for exceptions or policy changes. No custody function should operate at this level given current technology and regulatory expectations.

Most AI deployments in traditional custody operate at L1 or L2. BNY's digital employees, which propose actions for human approval, operate at L2. State Street's Alpha AI Data Quality, which flags exceptions for human review, operates at L1-L2. The same ceilings apply to digital asset custody.

The Critical Boundary

The governance question for each use case is: what autonomy level is appropriate given the consequences of error? But in digital asset custody, there is a harder constraint that overrides this analysis.

Any function that can directly cause an on-chain state change must have a human authorisation gate. This means the signing decision, the moment when a transaction is approved for broadcast to the blockchain, must remain at L0. No AI system should have discretion over whether a transaction is signed.
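One way to make this boundary auditable is to keep a register of custody functions with their assigned autonomy levels and check it automatically. The sketch below is a hypothetical illustration: the register entries are examples drawn from this article, and the rules encoded are the ones stated here (signing at L0, human gate for on-chain actions so a maximum of L2, nothing at L4).

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    L0_MANUAL = 0
    L1_ASSISTED = 1
    L2_AUGMENTED = 2
    L3_SUPERVISED = 3
    L4_AUTONOMOUS = 4


@dataclass(frozen=True)
class CustodyFunction:
    name: str
    level: Autonomy
    is_signing_decision: bool = False
    can_change_chain_state: bool = False  # can it directly cause an on-chain state change?


def violations(registry: list[CustodyFunction]) -> list[str]:
    """Check a hypothetical autonomy register against the boundary rules in the text."""
    problems = []
    for f in registry:
        if f.is_signing_decision and f.level != Autonomy.L0_MANUAL:
            problems.append(f"{f.name}: signing decision must be L0")
        elif f.can_change_chain_state and f.level > Autonomy.L2_AUGMENTED:
            problems.append(f"{f.name}: on-chain action needs a human gate (max L2)")
        if f.level == Autonomy.L4_AUTONOMOUS:
            problems.append(f"{f.name}: no custody function should run at L4")
    return problems


registry = [
    CustodyFunction("transaction signing", Autonomy.L0_MANUAL,
                    is_signing_decision=True, can_change_chain_state=True),
    CustodyFunction("SAR case pre-population", Autonomy.L2_AUGMENTED),
    CustodyFunction("hot wallet rebalancing orchestration", Autonomy.L3_SUPERVISED),
    CustodyFunction("auto-broadcast of rebalancing transfers", Autonomy.L3_SUPERVISED,
                    can_change_chain_state=True),
]
print(violations(registry))
# ['auto-broadcast of rebalancing transfers: on-chain action needs a human gate (max L2)']
```

Running a check like this in change control turns the documented autonomy limits into something that fails loudly when a function drifts past its ceiling.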

This does not prevent AI from operating at higher autonomy levels for functions that do not control signing. The distinction is between authorisation and orchestration. Authorisation (deciding whether to sign) stays at L0. Orchestration (preparing transactions, optimising parameters, managing workflows) can reach L2 or L3, provided the signing step itself remains human-controlled or is governed by deterministic policy with no machine learning discretion.

Consider hot wallet rebalancing. An AI system at L3 could monitor balances, determine that a transfer is needed, calculate the optimal amount and timing, and queue the transaction. But the transaction itself would either require human approval before signing or execute through a deterministic policy engine that permits only pre-approved transfer types within pre-approved limits. The AI orchestrates. It does not authorise. If your architecture gives an AI system the ability to cause a signed transaction to be broadcast based on its own judgment, you have exceeded the L2 ceiling for on-chain actions, regardless of what you call the function.
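The orchestration/authorisation split can be sketched as a signing gate that accepts only two inputs: an explicit human approval, or a deterministic match against pre-approved routes and limits. The route table, wallet names, and `may_sign` function are hypothetical. The model's confidence travels with the proposal for context but is never read by the gate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProposedTransfer:
    # Produced by the orchestration layer (for example, an ML rebalancing model).
    source: str
    destination: str
    amount: float
    model_confidence: float  # informational only; the gate below never reads it


# Hypothetical pre-approved transfer types: (source, destination) -> maximum amount.
PRE_APPROVED_ROUTES = {("hot-eth-1", "hot-eth-2"): 50.0, ("hot-eth-2", "hot-eth-1"): 50.0}


def may_sign(t: ProposedTransfer, human_approver: Optional[str] = None) -> bool:
    """Authorisation gate: human approval, or a deterministic route-and-limit match."""
    if human_approver:
        return True
    limit = PRE_APPROVED_ROUTES.get((t.source, t.destination))
    return limit is not None and t.amount <= limit


routine = ProposedTransfer("hot-eth-1", "hot-eth-2", 20.0, model_confidence=0.97)
unusual = ProposedTransfer("hot-eth-1", "external-addr", 20.0, model_confidence=0.99)
print(may_sign(routine), may_sign(unusual), may_sign(unusual, human_approver="ops-lead"))
# True False True
```

The higher model confidence on the unusual transfer changes nothing, which is the point: the AI orchestrates, and authorisation stays outside its discretion.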

Three Layers, Three Maturity Levels

The three layers where AI adds value are at different stages of maturity and appropriate for different autonomy levels.

Compliance: Mature and Proven (L1-L2)

Compliance investigation is the most mature use case, and the one where you should start if you have not already. The problem is identical to traditional custody: high volumes of alerts, most of them false positives, requiring human investigation and potential regulatory reporting.

At L1, AI flags suspicious transactions and analysts investigate each one. At L2, AI pre-populates case files with transaction graphs, entity information, and draft narratives; analysts review and approve. The shift from L1 to L2 is where the efficiency gains compound. Building a case file from scratch takes hours. Reviewing and editing a pre-populated file takes minutes.

The market has validated solutions. Independent research using seized server data as ground truth has found leading blockchain analytics providers achieving false positive rates below 1% with high coverage of known illicit addresses. Chainalysis data has been admitted in US federal court proceedings. TRM Labs and Elliptic offer competing products with different strengths: TRM has strong US government relationships; Elliptic has deeper DeFi protocol coverage and stronger presence in European markets.

Before selecting a provider, answer three questions. What is your current false positive rate? What reduction would justify the investment? Which provider performs best against your specific transaction profile? If you cannot answer the first question, you do not have the baseline to evaluate AI tools. Instrument your current process before you buy.
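Instrumenting the baseline can start as simply as counting closed alert dispositions. The sketch below assumes a hypothetical disposition label `"escalated"` for alerts that led to a case or report and treats every other closed alert as a false positive; your own taxonomy will differ. The second function gives a rough, linear estimate of analyst hours recovered for a given false positive reduction and handling time.

```python
from collections import Counter


def alert_baseline(dispositions: list[str]) -> dict:
    """Triage baseline from closed alerts. Assumes 'escalated' marks a true positive."""
    counts = Counter(dispositions)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no closed alerts to measure")
    false_positives = total - counts["escalated"]
    return {
        "total_alerts": total,
        "false_positives": false_positives,
        "false_positive_rate": false_positives / total,
    }


def analyst_hours_saved(false_positive_count: int, fp_reduction: float,
                        minutes_per_alert: float) -> float:
    """Hours no longer spent on suppressed false positives (assumes linear handling time)."""
    return false_positive_count * fp_reduction * minutes_per_alert / 60


# Hypothetical quarter: 950 alerts closed with no issue, 50 escalated.
baseline = alert_baseline(["closed_no_issue"] * 950 + ["escalated"] * 50)
print(baseline["false_positive_rate"])
print(analyst_hours_saved(baseline["false_positives"], fp_reduction=0.4, minutes_per_alert=30))
```

Comparing the second figure with a vendor's licence and integration cost answers the "what reduction would justify the investment" question in your own numbers rather than the vendor's.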

Security: Real but Uneven (L1-L2)

Security monitoring is less mature than compliance but accelerating. The tools exist. Custodian adoption and outcomes remain opaque.

At L1, AI detects anomalies and alerts the security team. At L2, AI provides threat context and recommended response actions; humans investigate and execute. Pre-signing simulation occupies a specific place in this framework: simulation as an advisory control, where AI analyses a transaction and presents findings for human review, operates at L1. Automatic blocking, where AI can prevent a transaction from proceeding without human intervention, would operate at L3 and requires careful governance given the potential for both security benefit and operational disruption.
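The difference between advisory and blocking simulation is a routing decision made after the simulation returns its findings. The sketch below does not model the simulation itself (no real vendor API is shown); `Finding`, `Mode`, and the severity labels are hypothetical. In advisory mode even a critical finding goes to a human; only blocking mode lets the control halt a transaction on its own, which is the L3 case needing explicit governance.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"  # L1: findings are shown to a human reviewer
    BLOCKING = "blocking"  # L3: critical findings halt the transaction without a human


@dataclass(frozen=True)
class Finding:
    severity: str  # hypothetical labels: "info", "warning", "critical"
    message: str


def route_simulation(findings: list[Finding], mode: Mode) -> str:
    """Decide what happens after a pre-signing simulation returns its findings."""
    critical = any(f.severity == "critical" for f in findings)
    if mode is Mode.BLOCKING and critical:
        return "blocked"                 # the control acts alone: L3 governance applies
    if findings:
        return "sent to human reviewer"  # advisory path: a human still decides
    return "no findings"


findings = [Finding("critical", "simulated call changes the wallet's implementation contract")]
print(route_simulation(findings, Mode.ADVISORY))  # sent to human reviewer
print(route_simulation(findings, Mode.BLOCKING))  # blocked
```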

Hexagate, now part of Chainalysis, provides pre-signing simulation across dozens of blockchain networks. Zodia Custody has partnered with CUBE3.AI for real-time threat intelligence. Coinbase has disclosed that AI supports almost every aspect of its security operations, though specifics on custody operations are not public.

The threat environment is intensifying. Chainalysis estimated $3.4 billion stolen in 2025, up 55% from 2024, with North Korea's Lazarus Group accounting for $2.02 billion. The attack pattern is increasingly supply chain compromise and social engineering rather than cryptographic attacks. AI can help with anomaly detection and behavioural monitoring, but independently validated detection rates for these attack vectors are not publicly available.

Security AI is a defensive spend. You are not reducing analyst hours; you are reducing the probability of catastrophic loss. That makes business cases harder to build but does not make the investment less important.

Operations: Largest Opportunity, Earliest Stage

Operational automation has the clearest business case but the least mature tooling.

Current wallet management is rule-based and operates at what might be called L0.5: automated but not intelligent. Fireblocks' Gas Station automatically replenishes native tokens when balances fall below threshold. Round-robin algorithms balance withdrawal requests across hot wallets. These are deterministic systems executing predefined logic, not AI systems learning from data.
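For contrast with the AI use cases that follow, here is what this "L0.5" logic looks like as code. This is not the Fireblocks Gas Station API; it is a hypothetical sketch of a fixed threshold top-up rule and round-robin assignment of withdrawals to hot wallets. Nothing in it learns from data.

```python
from itertools import cycle


def top_up_amount(balance: float, threshold: float, target: float) -> float:
    """Fixed rule: if the native-token balance falls below threshold, refill to target."""
    return max(target - balance, 0.0) if balance < threshold else 0.0


hot_wallets = ["hot-1", "hot-2", "hot-3"]
next_wallet = cycle(hot_wallets)  # round-robin: each withdrawal goes to the next wallet in turn

assignments = [(req, next(next_wallet)) for req in ["w-101", "w-102", "w-103", "w-104"]]
print(top_up_amount(0.02, threshold=0.05, target=0.25))
print(assignments)
# [('w-101', 'hot-1'), ('w-102', 'hot-2'), ('w-103', 'hot-3'), ('w-104', 'hot-1')]
```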

The largest opportunity is in staking, and the economics can be substantial. Staking yields vary significantly by network, validator performance, and market conditions, but typically range from 3% to 5% annually for major proof-of-stake networks. For institutional custodians, staking represents a meaningful revenue line beyond safekeeping fees, with economics depending on stakeable asset mix, client mandates, and competitive fee structures.
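A worked example shows how the yield range translates into custodian revenue. The book size ($1 billion staked) and the 15% commission on rewards are hypothetical; the formula ignores price movements, compounding, slashing, and validator downtime.

```python
def staking_fee_revenue(staked_usd: float, annual_yield: float, commission: float) -> float:
    """Annual custodian revenue = staked value x network yield x commission on rewards."""
    return staked_usd * annual_yield * commission


# Hypothetical book: $1bn staked, 15% commission, across the 3% to 5% yield range.
for y in (0.03, 0.04, 0.05):
    print(f"yield {y:.0%}: ${staking_fee_revenue(1_000_000_000, y, 0.15):,.0f}")
# yield 3%: $4,500,000
# yield 4%: $6,000,000
# yield 5%: $7,500,000
```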

AI applications are developing across the staking value chain. Validator selection could operate at L2: AI analyses uptime history, fee structures, and slashing incidents to recommend validators; humans approve the selection. Reward calculation and allocation could approach L3: AI automatically reconciles rewards across client accounts; humans review exception reports. Slashing risk prediction operates at L1: AI identifies validators showing early warning signs; humans decide whether to unstake.
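Validator selection at L2 can be sketched as a ranked shortlist that a human approves. The linear score below (uptime minus half the validator's commission, with any slashing history disqualifying) is a hypothetical stand-in for whatever model a custodian would actually use; the output is a recommendation and never triggers delegation by itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatorStats:
    validator_id: str
    uptime: float            # fraction of duties performed over the lookback window, 0-1
    commission: float        # validator fee on rewards, 0-1
    slashing_incidents: int  # count over the lookback window


def score(v: ValidatorStats) -> float:
    """Hypothetical score: reward uptime, penalise fees."""
    return v.uptime - 0.5 * v.commission


def recommend(validators: list[ValidatorStats], n: int) -> list[str]:
    """L2 output: a ranked shortlist for human approval, never an automatic delegation."""
    eligible = [v for v in validators if v.slashing_incidents == 0]
    ranked = sorted(eligible, key=score, reverse=True)
    return [v.validator_id for v in ranked[:n]]


candidates = [
    ValidatorStats("val-a", 0.999, 0.05, 0),
    ValidatorStats("val-b", 0.995, 0.05, 0),
    ValidatorStats("val-c", 0.999, 0.02, 1),  # excluded: slashing history
    ValidatorStats("val-d", 0.960, 0.00, 0),
]
print(recommend(candidates, n=2))  # ['val-a', 'val-b']
```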

The regulatory path is becoming clearer. In May 2025, staff in the SEC's Division of Corporation Finance stated that protocol staking activities do not involve the offer and sale of securities, characterising the related services as administrative or ministerial. The IRS established a safe harbour for ETP trusts. But no custodian has disclosed AI-driven staking optimisation in production. The tools are being built. Deployment is lagging.

The Agentic Trajectory

The industry is moving toward agentic AI: systems that can execute multi-step workflows with minimal human oversight. In traditional custody, BNY describes its AI systems as digital employees with defined roles, managers, and escalation protocols. These systems operate at L2-L3: they can complete tasks autonomously within guardrails, escalating to humans when they encounter situations outside their training.

In digital asset custody, agentic capabilities will likely emerge first in operational functions where errors are recoverable and, critically, where the agent orchestrates but does not authorise. An agent that monitors gas prices, predicts optimal transaction timing, and queues non-urgent transactions for batch processing could operate at L3, provided the actual signing remains human-controlled or governed by deterministic policy. An agent that manages hot wallet rebalancing across multiple chains, preparing transfers within predefined limits, could operate at L3 under the same constraint.
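The gas-timing agent described above can be sketched as a small class that holds non-urgent transfers and releases them as a batch when gas is cheap relative to recent prices. The class name, the moving-average rule (a stand-in for a real forecasting model), and the 10% discount are hypothetical. The released batch is handed to the human or deterministic signing gate; the agent never signs anything.

```python
from collections import deque
from statistics import mean


class GasBatcher:
    """Hypothetical orchestration agent: queues non-urgent transfers until gas is cheap."""

    def __init__(self, window: int = 12, discount: float = 0.9):
        self.recent_gas = deque(maxlen=window)  # recent gas price observations (gwei)
        self.discount = discount                # release when price <= discount x recent mean
        self.pending: list[str] = []

    def submit(self, tx_id: str, urgent: bool) -> list[str]:
        if urgent:
            return [tx_id]  # urgent transfers go straight to the approval queue
        self.pending.append(tx_id)
        return []

    def observe(self, gas_gwei: float) -> list[str]:
        """Record a price; if it is cheap relative to recent history, release the batch."""
        cheap = bool(self.recent_gas) and gas_gwei <= self.discount * mean(self.recent_gas)
        self.recent_gas.append(gas_gwei)
        if cheap and self.pending:
            batch, self.pending = self.pending, []
            return batch  # handed to the signing gate, not signed here
        return []


b = GasBatcher(window=3)
b.submit("tx-1", urgent=False)
b.submit("tx-2", urgent=False)
print(b.observe(40), b.observe(42), b.observe(30))  # [] [] ['tx-1', 'tx-2']
```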

Compliance and security will be slower to reach L3. The consequences of missed threats or incorrect filings create regulatory and reputational risk that most institutions will want humans to own. And signing will remain at L0 indefinitely. An agentic system that could approve transactions autonomously would represent an unacceptable concentration of risk, however sophisticated its guardrails.

The path from current state to supervised autonomy requires investment in three areas: data infrastructure to give agents the information they need, policy frameworks to define guardrails and escalation triggers, and monitoring systems to detect when agents are operating outside expected parameters. Custodians building these capabilities now will be positioned to deploy agentic systems as the technology matures.

Implementation Realities

Multi-chain complexity makes AI deployment harder than in traditional custody. BlackRock's BUIDL fund operates across multiple blockchains. Coinbase supports hundreds of cryptocurrencies. Each chain has unique consensus mechanisms, transaction formats, and fee structures. AI systems must either generalise across chains or be trained separately for each. Neither approach is straightforward.

Regulatory fragmentation adds difficulty. The GENIUS Act established a US federal stablecoin framework in July 2025. The OCC has taken actions enabling digital asset firms to operate with national bank status. MiCA reached full application in the EU in December 2024. Cold storage requirements vary by jurisdiction, typically requiring between 90% and 98% of client assets to be held offline. Most obligations under the EU AI Act apply from August 2026, adding governance requirements for AI systems. Custodians operating globally must build for multiple regimes.
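Building for multiple regimes often starts with parameterising checks like the cold storage ratio. In the sketch below the regime names and thresholds are hypothetical, chosen only to span the 90% to 98% range cited above; real rules also differ in how "cold" is defined, how assets are valued, and how often the ratio is measured.

```python
# Hypothetical minimum cold-storage shares per regime, spanning the typical 90%-98% range.
MIN_COLD_SHARE = {"regime_a": 0.90, "regime_b": 0.95, "regime_c": 0.98}


def cold_storage_check(cold_value: float, hot_value: float,
                       regime: str) -> tuple[float, bool, float]:
    """Return (cold share, compliant?, value that must move from hot to cold to comply)."""
    total = cold_value + hot_value
    share = cold_value / total
    required = MIN_COLD_SHARE[regime]
    shortfall = max(required * total - cold_value, 0.0)
    return share, share >= required, shortfall


# Hypothetical book of 940 (cold) and 60 (hot), in millions.
for regime in MIN_COLD_SHARE:
    share, ok, shortfall = cold_storage_check(940, 60, regime)
    print(f"{regime}: cold share {share:.1%}, compliant={ok}, move {shortfall:.1f}m to cold")
```

The same portfolio can be compliant in one jurisdiction and short in another, which is why a single global hot/cold configuration rarely works.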

Disclosure asymmetry is the core problem for competitive benchmarking. Vendors publish capabilities. Custodians do not publish what they deploy or what outcomes they achieve. BNY's Eliza platform supports over 100 AI solutions across the firm, but none are specified as digital-asset-focused. State Street's Alpha AI reduces false positives in traditional custody; equivalent capabilities for digital assets have not been disclosed.

Talent scarcity constrains execution. The intersection of blockchain expertise, AI capability, and institutional custody knowledge is small. Traditional custody professionals lack crypto-native experience. Crypto-native professionals often lack governance and audit rigour. AI engineers lack domain knowledge in either. Building teams that bridge all three takes time.

What We Know and What We Do Not

Chainalysis attribution accuracy has been tested in court proceedings. Regulatory frameworks for custody and staking are codified. Core signing infrastructure remains deterministic and should stay that way.

We do not know custodian-specific false positive rates for transaction monitoring. We do not know the ratio of automated to human decisions in alert triage. We do not know assets under custody for several major players, making market share analysis unreliable. We do not know what AI, if any, traditional custodians are deploying specifically for their digital asset operations.

Several vendor claims are self-reported without independent verification. Detection rates, time savings, and efficiency improvements cited in marketing materials should be evaluated against your own baseline rather than accepted at face value.

The agentic AI trajectory is early. No custodian has disclosed agentic systems operating at L3 for any digital asset function. The realistic near-term ceiling is L2: AI proposes, humans approve.

Where to Start

Sequence matters.

Start with compliance. The tools are mature, the ROI is measurable, and implementation risk is low. Instrument your current false positive rate. Evaluate providers against your transaction profile. Deploy, measure the reduction, and use the results to build credibility for subsequent investments. Target L2: AI pre-populates case files, analysts review and approve.

Move to staking operations second. This is where the revenue upside is largest. The regulatory framework is becoming clearer. The complexity of validator selection, reward calculation, and cross-protocol comparison justifies automation. Expect build-heavy integration even if you buy components, since production-ready AI tools for staking optimisation are not yet widely available. Target L2 for validator selection, with L3 possible for reward calculation once you have confidence in the system.

Treat security as continuous investment. The threat environment is worsening. Pre-signing simulation and behavioural monitoring should be part of your architecture even though they will not produce the same measurable ROI as compliance triage. Target L1-L2: AI detects and recommends, humans investigate and respond.

Do not touch signing. The boundary between AI-assisted operations and transaction authorisation must be documented, audited, and enforced. AI can orchestrate. It cannot authorise. If your architecture allows AI systems to cause a signed transaction to be broadcast based on machine learning judgment, you have created a vulnerability that no efficiency gain can justify.

Governance as Competitive Advantage

The pattern from Bybit, Ronin, and other major incidents is consistent: cryptographic primitives work, human processes fail. AI can strengthen those human processes. It can triage alerts, detect anomalies, optimise validator selection, and automate documentation. It cannot be allowed discretion over transaction signing.

The firms that deploy AI in the right layers, with documented autonomy limits and clear escalation protocols, will reduce costs, improve accuracy, and capture the staking revenue opportunity. Those that move too slowly will lose ground to competitors who have already automated compliance operations. Those that move too fast, giving AI systems any role in signing decisions, will discover that blockchain errors do not have a remediation process.

The autonomy spectrum provides the governance framework. For each function, define the appropriate level. Document the rationale. Build the monitoring to detect drift. Enforce the boundary between orchestration and authorisation. The custodians that get this right will not just be more efficient. They will be more trustworthy. In a market where institutional adoption depends on trust, that is the competitive advantage that matters.

About the Author

Brennan McDonald writes about AI transformation and change at brennanmcdonald.com and publishes the Global Custody Pro newsletter.

Advisory

The AI Change Leadership Intensive helps custody and operations leaders diagnose adoption constraints and accelerate operating model change. The programme uses the 5C Adoption Friction Model to identify specific intervention points and build momentum with resistant stakeholder groups.

Subscribe to the newsletter for weekly insights on leading AI transformational change: brennanmcdonald.com/subscribe

Sources

Sources range from regulatory filings and company disclosures to vendor case studies and market research. Self-reported performance metrics should be validated against your own operational baseline.

Regulatory Documents

SEC Division of Corporation Finance, Statement on Protocol Staking (May 2025)

GENIUS Act, Public Law (July 2025)

OCC Interpretive Letters and Charter Actions (2025)

EU Markets in Crypto-Assets Regulation (MiCA)

SEC Broker-Dealer Custody Guidance (December 2025)

Company Disclosures

Coinbase Global Inc. Q3 2025 Earnings Release

BitGo Platform Documentation (2025)

Fireblocks Platform Documentation (2025)

BNY Digital Asset Platform Announcements (2022-2025)

State Street/Taurus Partnership Announcement (2024)

Research and Analysis

Chainalysis Crypto Crime Report (2025)

EY-Parthenon Institutional Investor Survey (January 2025)

Key Terms

Agentic AI: AI systems that can execute multi-step workflows with minimal human oversight, making decisions and taking actions within defined guardrails. Distinguished from traditional AI by the capacity to act, not just analyse or generate.

AI Agent: A software system that uses AI to perceive its environment, make decisions, and take actions to achieve goals. In custody, agents might resolve exceptions, manage wallet balances, or optimise staking, escalating to humans for decisions outside their authority.

Human-in-the-Loop: A design pattern where humans review and approve AI decisions before execution. Standard in L1-L2 deployments. At L3, human involvement shifts to exception handling and monitoring.

Guardrails: Constraints that limit what an AI agent can do. In custody, guardrails include transaction value limits, approved counterparty lists, permitted asset types, and mandatory escalation triggers.

Tool Use: The ability of an AI agent to interact with external systems, APIs, and data sources. Enables agents to query balances, check transaction status, access market data, and execute operations as part of completing tasks.

Autonomy Level (L0-L4): Framework for classifying AI deployment maturity. L0: human only. L1: AI assists. L2: AI proposes, human approves. L3: AI acts within guardrails, human monitors. L4: fully autonomous. In digital asset custody, any function that can cause an on-chain state change must have a human authorisation gate, limiting such functions to L2 or below.

Article prepared January 2026. All figures as at Q3 2025 unless otherwise noted.