Global Custody Pro

Agentic AI in Post-Trade

Why Most AI Projects Fail and What to Do About It

This is the fourth instalment in our new series for Q1 2026. Share this email with a colleague now so they’re on the same page. We only grow through word of mouth and referrals.


You know the pattern. The technology works. The pilots succeed. Then something happens between proof-of-concept and production, and the initiative stalls.

Budgets get reallocated. Sponsors move on. The AI team is quietly absorbed into other functions. According to the MIT NANDA Initiative, 95% of generative AI pilots fail to achieve revenue acceleration. Gartner predicts more than 40% of agentic AI projects will be cancelled by 2027. Deloitte reports that while 38% of organisations are piloting agentic solutions, only 11% have systems in production. Definitions of "pilot," "agentic," and "production" vary across these studies, which limits direct comparison. But the signal is consistent: there is a wide gap between pilot success and sustained production value.

If this sounds familiar, the question is why. The conventional explanation blames technology: models hallucinate, data quality is poor, legacy systems resist integration. These challenges are real. But they do not explain why most projects stall.

The technology is rarely the whole problem.

This article examines an alternative hypothesis: that the real blocker is often organisational, not technical. Diagnosing which friction point is in the way may matter more than selecting the right vendor or model.

Post-trade reflects these patterns. Global custodians are expanding AI teams, budgets, and platform capabilities. BNY reports 117 AI solutions in production as at Q3 2025. State Street has operationalised its Alpha AI Data Quality platform. Northern Trust, according to a December 2025 press release, has cut custody tax operations processing time from eight hours to thirty minutes using AI analytics. Yet the gap between pilot and production remains wide.

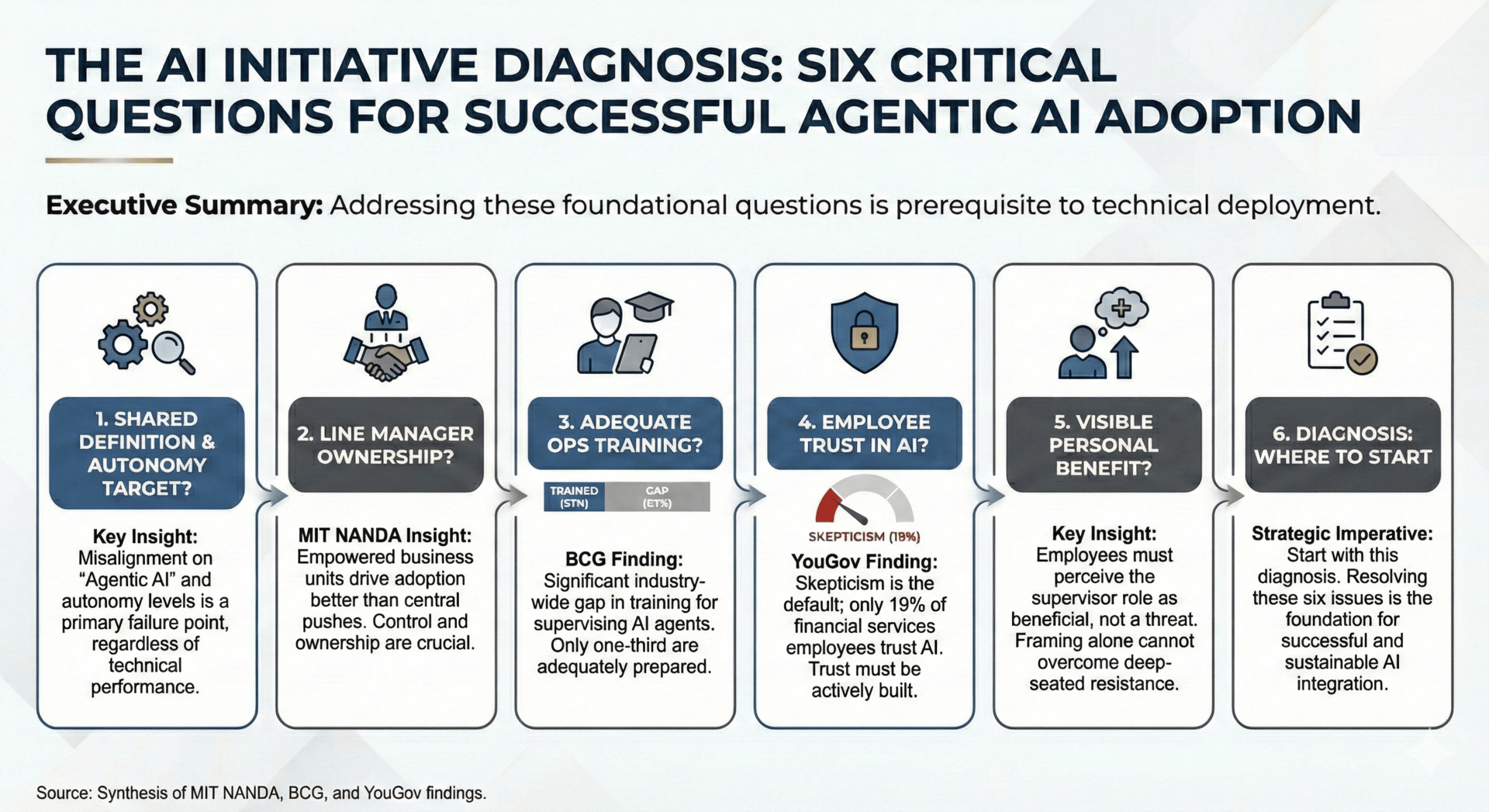

Research points to two of the five friction points that merit early attention: clarity about the autonomy target, and control over the workflows being transformed. Deloitte's finding that 42% of organisations are still developing their agentic strategy roadmap points to clarity. The MIT NANDA Initiative's finding that line manager empowerment tracks with success, and State Street's decision to insource operations before deploying AI, both point to control as foundational. The remaining three friction points (capability, credibility, and consequences) may become binding only after these first two are addressed.

The Definitional Problem

There is no consensus definition of what agentic AI actually means.

Gartner describes it as artificial intelligence systems that take action-based roles, operating independently or in collaboration with humans. IDC Research Director Heather Hershey sets a higher bar: autonomous systems that can independently plan, reason, make decisions, and execute multi-step tasks with minimal human intervention.

That gap affects what buyers expect. Many products currently marketed as "agentic" are closer to traditional automation wrapped in a conversational interface. Industry observers have a term for this: agent-washing. The confusion is reflected in recent commentary:

"I've seen many 'agentic AI' products in the past twelve months that, upon further investigation, were AI co-pilots or LLM wrappers on conventional machine learning. There was no 'agent' in the 'agentic AI'. Buyers will need to arm themselves with the knowledge of what agentic AI is and what it is not in order to avoid overpaying for features that cannot perform as promised." — Heather Hershey, Research Director, IDC (2025)

"Most agentic AI projects right now are early-stage experiments or proof of concepts that are mostly driven by hype and are often misapplied." — Anushree Verma, Analyst, Gartner (June 2025)

A Proposed Autonomy Framework (L1-L4)

One way to cut through this confusion is to classify AI systems by their level of autonomy. This framework is a practical alignment tool, not an industry standard. Its value lies in forcing an explicit conversation about autonomy targets.

Level 1 (Assisted): AI generates recommendations only. No system writes. Humans review outputs and decide whether to act.

Tests: (1) Does the AI write to any system of record? (2) Can the AI execute without human approval? If both answers are no, it is L1.

Level 2 (Supervised Autonomy): AI executes system writes (tickets, messages, repairs, data updates) within enforced guardrails. Mandatory human review thresholds and audit trails.

Tests: (1) Does the AI write to systems of record? (2) Are there defined guardrails with human review triggers? If both answers are yes, it is L2.

Level 3 (Conditional Autonomy): AI proposes and executes novel multi-step plans across tools. Human intervention by exception only.

Tests: (1) Can the AI chain multiple actions without per-action approval? (2) Does it handle novel scenarios not pre-defined in rules? If both answers are yes, it is L3.

Level 4 (Full Autonomy): AI operates without human oversight across all scenarios. Not a current goal for custody operations.
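The L1-L3 tests can be expressed as a small decision procedure. The sketch below is a hypothetical illustration, not part of the framework itself: the field names are invented, the tests are checked from the most autonomous level down (an L3 system would usually also pass the L2 tests), and answer combinations that no test covers, such as system writes with no defined guardrails, are reported as unclassified rather than forced into a level. L4 has no test in the framework and is never returned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyAnswers:
    """Yes/no answers to the framework's tests (field names are hypothetical)."""
    writes_to_system_of_record: bool
    executes_without_approval: bool
    has_guardrails_with_review_triggers: bool
    chains_actions_without_per_action_approval: bool
    handles_novel_scenarios: bool


def classify_autonomy(a: AutonomyAnswers) -> str:
    """Apply the L1-L3 tests, most autonomous level first.

    L4 is out of scope for custody and has no test, so it is never returned.
    """
    if a.chains_actions_without_per_action_approval and a.handles_novel_scenarios:
        return "L3"
    if a.writes_to_system_of_record and a.has_guardrails_with_review_triggers:
        return "L2"
    if not a.writes_to_system_of_record and not a.executes_without_approval:
        return "L1"
    # Example: system writes with no defined guardrails. This is a governance
    # gap, not an autonomy level, so it is flagged instead of classified.
    return "unclassified"


# Hypothetical exception-repair tool that writes fixes behind review triggers.
exception_repair_tool = AutonomyAnswers(
    writes_to_system_of_record=True,
    executes_without_approval=True,
    has_guardrails_with_review_triggers=True,
    chains_actions_without_per_action_approval=False,
    handles_novel_scenarios=False,
)
print(classify_autonomy(exception_repair_tool))  # L2
```

Returning "unclassified" instead of defaulting to the nearest level keeps the conversation honest: a tool that writes to systems of record without guardrails is a control problem to fix, not a point on the autonomy scale.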

Why does this matter? Because vendor claims often conflate Level 1 and Level 2 capabilities with Level 3 and Level 4 aspirations. When buyers expect autonomous agents but receive assisted tools, the initiative gets classified as a failure even if the technology performed exactly as designed.

For a detailed examination of how AI is currently deployed across custody operations, see The Complete Guide to AI in Global Custody, the first article in this series.

Where Post-Trade Actually Stands

There is a gap between agentic AI rhetoric and post-trade reality. Understanding what firms have publicly disclosed, versus what vendors claim, provides context.

What "Production" Means

In custody and post-trade, "production" typically means: (1) integrated into core workflow, not a side tool; (2) clear ownership across operations and technology; (3) defined escalation, audit, and rollback procedures; (4) monitored error rates with incident playbooks; (5) measurable improvement sustained over multiple months. Claims of production deployment should be tested against these criteria.
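One way to apply these criteria consistently is to record the evidence for each as a yes/no flag and list what is missing. This is a minimal sketch under stated assumptions: the field names are hypothetical, and it treats all five criteria as required, which is a stricter reading than the text itself states.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ProductionEvidence:
    """One flag per production criterion; True means evidence exists.

    Field names are hypothetical labels for the five criteria in the text.
    """
    integrated_into_core_workflow: bool
    clear_ops_and_tech_ownership: bool
    escalation_audit_rollback_defined: bool
    error_rates_monitored_with_playbooks: bool
    improvement_sustained_for_months: bool


def missing_criteria(evidence: ProductionEvidence) -> list[str]:
    """Return the names of criteria with no supporting evidence, in order."""
    return [f.name for f in fields(evidence) if not getattr(evidence, f.name)]


def is_production(evidence: ProductionEvidence) -> bool:
    """Assumption: a claim counts as 'production' only if all five are met."""
    return not missing_criteria(evidence)


claim = ProductionEvidence(
    integrated_into_core_workflow=True,
    clear_ops_and_tech_ownership=True,
    escalation_audit_rollback_defined=False,
    error_rates_monitored_with_playbooks=False,
    improvement_sustained_for_months=True,
)
print(is_production(claim))     # False
print(missing_criteria(claim))  # ['escalation_audit_rollback_defined', 'error_rates_monitored_with_playbooks']
```

Listing the missing criteria, rather than returning a single verdict, turns a vendor's "in production" claim into specific follow-up questions.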

BNY: Disclosed Supervised Autonomy

BNY has been among the most transparent of the major custodians in disclosing its AI deployment.

Disclosed: BNY operates 117 AI solutions in production as at Q3 2025 (earnings release). Over 100 digital employees work on payment validations and code repairs (CEO statement). 98% of employees have been trained on generative AI; 20,000 are actively building agents (company disclosures). The NAV calculation agent achieved a 40% reduction in false positives; legal contract review time decreased by 25% (company-reported, not independently verified).

Inference: Based on the "trainer and nurturer" framing and defined escalation protocols, these deployments appear to operate at Level 2 (Supervised Autonomy). Chief Data and AI Officer Sarthak Pattanaik described the shift: "Now, instead of handling certain tasks in the first instance, the role of the human operator is to be the trainer or the nurturer of the digital employee."

BNY's approach addresses multiple friction points: the 98% training rate targets Capability; the "trainer and nurturer" framing targets Consequences; the defined escalation protocols address Credibility by building trust in the system's guardrails.

Not publicly disclosed: Human review rates, rollback procedures, error budgets, audit scope, and incident frequency. Most custodians do not disclose operational AI metrics at this level of granularity.

State Street: The Case for Operational Control

State Street's approach offers a different lesson about prerequisites for AI deployment.

Disclosed: The firm insourced operations previously outsourced to vendors in India (Tahiri statement, June 2025). The Alpha AI Data Quality system reduced false positive exceptions from over 31,000 to approximately 4,000 over six months while maintaining detection of genuine issues (company-reported).
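For scale, the State Street figures imply a reduction of roughly 87% in false positive exceptions. Both endpoints are approximate ("over 31,000" and "approximately 4,000"), and the metric is defined differently from BNY's reported 40% reduction, so the two should not be compared directly.

```latex
\text{reduction} = 1 - \frac{4{,}000}{31{,}000} \approx 1 - 0.129 = 0.871 \approx 87\%
```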

Executive Vice President and Chief Operating Officer Mostapha Tahiri explained the rationale at the Fortune COO Summit in June 2025: "Why would you insource more people in an era of AI?" His answer: operational control is a prerequisite for change. Third-party arrangements create friction due to compliance complexity, cultural misalignment, and coordination costs. When you outsource an operation, you also outsource your ability to transform it.

Inference: The insourcing decision suggests operational control was viewed as a prerequisite for AI deployment. The underlying principle (you cannot transform operations you do not control) holds regardless of the undisclosed cost and scope figures. The move directly addresses Control by establishing operational ownership before transformation.

Not publicly disclosed: Cost of insourcing, timeline, scope of operations affected, and current AI deployment status in insourced functions. Withholding such details is standard practice for strategic operational decisions.

For detailed analysis of AI in settlement and clearing operations, see AI in Clearing and Settlement, the third article in this series.

Disclosure Gaps

Despite extensive industry coverage, no major custodian has publicly disclosed an agentic system operating at Level 3 or above in production custody operations. The most advanced deployments disclosed operate with human supervision.

"It depends on what you say an agent is, what you think an agent is going to accomplish and what kind of value you think it will bring. It's quite a statement to make when we haven't even yet figured out ROI on LLM technology more generally." — Marina Danilevsky, Senior Research Scientist, IBM (November 2025)

Other major custodians, including JPMorgan, Citi, and Northern Trust, have disclosed less about their AI initiatives. Direct comparison is difficult, and BNY's transparency may not be representative of industry practice.


Why AI Projects Fail

The failure statistics are sobering. But understanding why projects fail is harder than counting them. Research points to several categories of adoption friction that may distinguish successful implementations from abandoned initiatives.

1. Clarity

Deloitte reports that 42% of organisations are still developing their agentic strategy roadmap, with 35% having no formal strategy at all. When leadership expects autonomous agents but operations teams expect assisted tools, the disconnect registers as project failure regardless of technical performance.

Without shared clarity, every stakeholder evaluates the initiative against different expectations. The autonomy framework above exists precisely to force this conversation: are we building Level 1 or Level 2? What would Level 3 require? Agreement on the target is a prerequisite for meaningful progress assessment.

2. Control

The MIT NANDA Initiative found that success tracks with empowering line managers, rather than only central AI labs, to drive adoption. According to the study, vendor purchases succeed approximately 67% of the time, while internal builds succeed only about 22% of the time.

When AI initiatives are driven top-down without business unit sponsorship, adoption tends to stall. State Street's insourcing decision exemplifies this at the operational level: the firm sought direct control over processes before attempting to transform them.

3. Capability

According to BCG's 2025 survey, only one-third of financial services employees have received adequate training in generative AI. The AICPA and CIMA's December 2025 survey found that 88% of respondents believe AI will be the most transformative technology trend over the next 12 to 24 months, but only 8% feel their organisation is "very well prepared."

BNY's response has been heavy investment in capability. According to company disclosures, 98% of employees have received generative AI training and approximately 20,000 employees are actively building AI agents through the Eliza platform.

4. Credibility

A December 2025 YouGov survey found that only 19% of Americans trust AI in financial services, while 48% say they do not. According to the Edelman Trust Barometer, among those who already distrust AI, 67% feel it is being "forced" upon them.

Trust cannot be mandated. When executives push AI adoption faster than employees find comfortable (and the IBM CEO Study found that 60% of banking and financial markets CEOs acknowledge doing exactly this), the result tends to be compliance rather than genuine adoption.

5. Consequences

The Edelman Trust Barometer Flash Poll from November 2025 contains a finding worth attention. The survey covered respondents across five countries (Brazil, China, Germany, UK, US); results may vary by geography and organisation type.

When organisations frame AI adoption around job security (telling employees their jobs are safe and AI will not replace them), only 26% embrace the technology. When organisations reframe around job transformation (how AI helps employees do their current jobs better), the embrace rate rises to 43%.
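The gap can be stated in two ways that are easy to conflate: as an absolute difference in percentage points, or as a relative uplift in the embrace rate.

```latex
\begin{aligned}
\text{absolute difference} &= 43\% - 26\% = 17 \text{ percentage points} \\
\text{relative uplift} &= \frac{43}{26} - 1 \approx 0.65 \quad (\approx 65\%)
\end{aligned}
```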

Framing may matter as much as substance. Teams that can see personal benefit from becoming agent supervisors adopt more readily. Teams that perceive threat, even implicit threat, resist.

But framing works only when it matches practice. Training, role definitions, and career paths must align with the story being told. Messaging without structural change can read as manipulation.

BNY's approach reflects this. Chief Information Officer Leigh-Ann Russell stated that "while productivity is a metric, 'joy' and employee satisfaction are equally important. AI removes the drudgery from work." The firm positions human operators as "trainers and nurturers" of digital employees rather than workers being displaced.

AI will affect employment. Morgan Stanley analysis projects that European banks will cut approximately 200,000 jobs over the next five years, with back-office, middle-office, and operational roles most exposed (reported in Financial Times, "European banks set to cut 200,000 jobs as AI takes hold," December 2025). Successful framing requires acknowledging change while emphasising transformation rather than displacement.

For analysis of how AI is transforming digital asset custody specifically, see AI in Digital Asset Custody, the second article in this series.

Governance Considerations

Regulatory frameworks for agentic AI are emerging but not yet fully defined.

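The European Union AI Act's obligations for the high-risk AI systems listed in Annex III, which include systems used to evaluate the creditworthiness or establish the credit score of natural persons, apply from 2 August 2026. Credit scoring touches custody operations through collateral valuation and counterparty assessment, although whether a given use falls in scope depends on whether natural persons are being assessed. Penalties reach up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices, and up to €15 million or 3% for breaches of most other obligations, including those applying to high-risk systems.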

In the United States, Federal Reserve SR Letter 11-7 sets out supervisory guidance on model risk management. While no guidance specific to agentic AI has been issued, its expectations would reasonably extend to AI systems used in banking operations.

Research from the Federal Reserve Bank of Richmond, published in October 2025, found that banks with higher AI intensity incur greater operational losses than their less AI-intensive counterparts, driven by external fraud, customer problems, and system failures. But the researchers noted: "AI's negative effect is especially pronounced for banks that lack strong risk management, suggesting that robust internal control is an important prerequisite."

Governance may enable adoption rather than constrain it. Teams that trust the controls around AI systems adopt more readily than teams that perceive ungoverned risk.

This discussion is not legal advice. Firms should confirm regulatory obligations with counsel and relevant supervisory authorities.

Limits of the Current Evidence

Intellectual honesty requires acknowledging the limits of current evidence.

No publicly verified Level 3+ deployments exist in production post-trade operations. The most advanced publicly disclosed systems operate with human supervision. Claims of fully autonomous custody agents remain aspirational or vendor-driven based on available disclosures.

Quantified ROI from custodian agentic AI has not been independently verified. BNY reports efficiency metrics, but these are company-disclosed figures. Whether improvements derive from agentic autonomy or Level 1-2 assistance is often unclear from public disclosures.

Regulatory enforcement under the EU AI Act is untested: its obligations for high-risk systems do not apply until August 2026.

Which friction point matters most remains an open question. The categories of friction likely interact in ways that vary by organisation. Diagnosing the binding constraint requires assessment, not assumption.

The evidence base for change management approaches is still developing. The studies cited in this article represent early findings that may or may not generalise.

The Path Forward

The realistic near-term model for agentic AI in post-trade is supervised autonomy: agents that handle routine tasks independently while escalating complexity to humans. This is not a failure of ambition. It fits an operational context where errors are not tolerable.

Global custody involves safeguarding nearly $200 trillion in assets. A hallucination in a NAV calculation, a misclassified corporate action, or a failed settlement instruction can trigger regulatory breaches, client losses, and reputational damage. BNY's digital employee framework, with defined escalation protocols, fits this context.
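Supervised autonomy ultimately comes down to a routing decision for each proposed action: execute within guardrails, or escalate to a human. The sketch below illustrates that pattern only; the action types, the monetary limit, the confidence threshold, and all function and field names are hypothetical and are not drawn from BNY's or any other firm's disclosures. An agent's self-reported confidence is a weak signal on its own, so the sketch combines it with hard limits on action type and exposure.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class ProposedAction:
    """An action an agent wants to take (all fields are hypothetical)."""
    action_type: str         # e.g. "repair_settlement_instruction"
    amount: float            # monetary exposure of the action
    model_confidence: float  # agent's self-reported confidence, 0.0 to 1.0


# Hypothetical guardrail settings; real values would come from risk governance.
ALLOWED_ACTIONS = {"repair_settlement_instruction", "match_cash_break"}
MAX_AUTO_AMOUNT = 1_000_000.0
MIN_CONFIDENCE = 0.95


def route(action: ProposedAction, audit_log: list[str]) -> Route:
    """Decide whether an action may execute unattended or must go to a human."""
    reasons = []
    if action.action_type not in ALLOWED_ACTIONS:
        reasons.append("action type outside guardrails")
    if action.amount > MAX_AUTO_AMOUNT:
        reasons.append("amount above auto-execution limit")
    if action.model_confidence < MIN_CONFIDENCE:
        reasons.append("confidence below threshold")

    decision = Route.HUMAN_REVIEW if reasons else Route.AUTO_EXECUTE
    # Every decision is logged, including auto-executions, to support audit.
    audit_log.append(f"{action.action_type}: {decision.value} {reasons}")
    return decision


log: list[str] = []
print(route(ProposedAction("repair_settlement_instruction", 250_000.0, 0.98), log))    # Route.AUTO_EXECUTE
print(route(ProposedAction("repair_settlement_instruction", 5_000_000.0, 0.99), log))  # Route.HUMAN_REVIEW
```

Any single failed check is enough to escalate, which keeps the default conservative: routine, low-exposure work flows through, and anything unusual reaches a human with the reasons attached.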

The path forward is probably not better technology. The technology works at Level 2 and shows promise at Level 3. The path forward is figuring out what is actually in the way.

Questions Worth Asking

Do your stakeholders share a common definition of what agentic AI means and what level of autonomy you are targeting? Misalignment here registers as project failure regardless of technical performance.

Are your line managers empowered to drive adoption, or is AI being pushed from central functions without business unit ownership? The MIT NANDA findings on line manager empowerment and on vendor purchases versus internal builds suggest control counts.

Have your operations teams received adequate training to supervise AI agents? The BCG finding that only one-third have received adequate training suggests this is an industry-wide gap, not a failing of any particular firm.

Do your employees trust the AI systems you are deploying? The YouGov finding that only 19% of Americans trust AI in financial services suggests scepticism is the default, not the exception.

Can your employees see personal benefit from becoming agent supervisors? If they perceive only threat, framing alone will not resolve resistance.

That diagnosis is where most initiatives should start.
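The five questions map one-to-one onto the friction points: clarity, control, capability, credibility, and consequences. As a rough illustration, a yes/no pass over them can produce an ordered list of friction points to investigate. The ordering, which puts clarity and control first, reflects this article's hypothesis rather than an established rule, a binary answer is a crude stand-in for a real assessment, and all names in the code are hypothetical.

```python
# The five questions, keyed by the friction point each one probes.
QUESTIONS = {
    "Clarity": "Do stakeholders share a definition of agentic AI and a target autonomy level?",
    "Control": "Are line managers empowered to drive adoption, with business unit ownership?",
    "Capability": "Have operations teams been trained to supervise AI agents?",
    "Credibility": "Do employees trust the AI systems being deployed?",
    "Consequences": "Can employees see personal benefit from becoming agent supervisors?",
}

# Assumption taken from the article's hypothesis, not an established rule:
# Clarity and Control are examined first; the others may bind only afterwards.
PRIORITY = ["Clarity", "Control", "Capability", "Credibility", "Consequences"]


def friction_points(answers: dict[str, bool]) -> list[str]:
    """Return friction points answered 'no', in suggested order of attention.

    `answers` maps each friction point to True (yes) or False (no).
    Unanswered questions are treated as open friction points.
    """
    return [point for point in PRIORITY if not answers.get(point, False)]


answers = {"Clarity": False, "Control": True, "Capability": False, "Credibility": True}
flagged = friction_points(answers)
print(flagged)  # ['Clarity', 'Capability', 'Consequences']
for point in flagged:
    print(f"{point}: {QUESTIONS[point]}")
```

Treating an unanswered question as an open friction point is deliberate: if nobody can answer it, that is itself a finding.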

If you found this analysis useful

I write about AI transformation with more of a change management focus at brennanmcdonald.com. Subscribe to the newsletter for weekly analysis of how the industry is handling these challenges.

If you are facing a stalled initiative

The 5C Adoption Friction Model described in this article is the diagnostic framework I use with operations and technology teams. If you want to explore whether this approach might help identify what is blocking your initiative, you can book a conversation here.

The author previously spent 12 years working in technology and change roles in financial services, most recently at a global custodian.

Sources

Primary Sources

BNY Q3 2025 Earnings Release (16 October 2025)

State Street Q3 2025 10-Q Filing and Earnings Call (17 October 2025)

Northern Trust Press Release (9 December 2025)

EU AI Act Full Text and Implementation Timeline

FSOC Annual Report (December 2024)

Executive Statements

Robin Vince, CEO, BNY: Q3 2025 Earnings Call (October 2025)

Leigh-Ann Russell, CIO & Global Head of Engineering, BNY: AI in Finance Interview (2025)

Sarthak Pattanaik, Chief Data & AI Officer, BNY: OpenAI Case Study (2025)

Mostapha Tahiri, EVP & COO, State Street: Fortune COO Summit (June 2025)

Marina Danilevsky, Senior Research Scientist, IBM (November 2025)

Anushree Verma, Analyst, Gartner (June 2025)

Heather Hershey, Research Director, IDC (2025)

Research and Industry Reports

MIT NANDA Initiative (August 2025)

Gartner Agentic AI Predictions (June 2025, August 2025)

Deloitte Emerging Technology Trends Study (December 2025)

Edelman Trust Barometer Flash Poll: Trust and Artificial Intelligence (November 2025)

YouGov AI Trust Survey (December 2025)

BCG AI Training Survey (2025)

AICPA/CIMA Future-Ready Finance Survey (December 2025)

IBM CEO Study: Banking and Financial Markets (2024)

Federal Reserve Bank of Richmond: AI and Operational Losses (October 2025)

Financial Times, "European banks set to cut 200,000 jobs as AI takes hold" (December 2025), reporting Morgan Stanley analysis


Glossary

Agentic AI: Artificial intelligence systems that take action-based roles, operating independently or in collaboration with humans to achieve specific goals.

Agent-washing: A term used by industry observers for the practice of marketing AI products as "agentic" without delivering autonomous capabilities.

Digital employee: BNY's term for AI agents treated as colleagues with identities, managers, and defined roles, operating within guardrails with escalation protocols.

Autonomy Spectrum (L1-L4): A proposed framework for classifying AI systems by level of autonomous operation, from assisted (L1) through supervised autonomy (L2), conditional autonomy (L3), to full autonomy (L4). This is a practical alignment tool, not an industry standard.

5C Adoption Friction Model: A diagnostic framework identifying five categories of potential barriers to AI adoption: Clarity, Control, Capability, Credibility, and Consequences.

MIT NANDA Initiative: Research initiative at MIT studying AI adoption patterns in enterprise settings.

Article prepared January 2026. All figures as at Q3 2025 unless otherwise noted. Company-reported figures have not been independently verified.